Teach it to interact

Before digital brains can interact with the world, they will need to see and understand their environment and their place in it. All this requires is digitizing several hundred million years of evolution.

One look at human brain activity makes it clear how difficult it is to process vision – more than half of the cortical matter participates in helping us visualize and make sense of the world around us. This ability took more than half a billion years to evolve, but Fei-Fei Li, an associate professor of computer science at Stanford, is working to bring the power of vision to computers in just a few years.

"Vision begins with the eyes, but it truly takes place in the brain," said Li, who is the director of the Stanford Artificial Intelligence Laboratory. "Ultimately, we want to teach machines to see like we do."

TED Talks: At TED2015 in Vancouver, Fei-Fei Li described how her research team is teaching computers to understand pictures and how the technology could one day be applied.

Developing fast, accurate and meaningful visual processing software will be critical to the success of integrating thinking machines into everyday life. This goes far beyond labeling objects, Li said: it requires identifying people and objects, inferring geometry in three dimensions, and understanding the context of relations, emotions, actions and intentions. Consider an autonomous car that detects a fist-size object on the road: Is it a crumpled paper bag that can be safely driven over, or is it a rock that should be aggressively avoided?

Traditionally, researchers have tried to write algorithms that recognize pre-determined sets of shapes as specific objects. A cat, for instance, is something with a round face, pointy ears and a tail. The natural variations of individual objects, though, would require an impossibly complex index of object classes. Instead, Li realized that she needed to treat computers like toddlers, who learn to see and understand through millions and millions of experiences.
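
To see why hand-written shape rules break down, here is a minimal sketch of the cat rule described above. The function name `looks_like_cat` and the dictionary fields are hypothetical, chosen only for illustration; they are not taken from any actual vision system.

```python
# Hypothetical hand-written rule, for illustration only:
# "a cat is something with a round face, pointy ears and a tail."
def looks_like_cat(shape):
    return bool(
        shape.get("face") == "round"
        and shape.get("ears") == "pointy"
        and shape.get("tail", False)
    )

# A cat sitting upright, with every expected feature visible.
sitting_cat = {"face": "round", "ears": "pointy", "tail": True}

# The same animal curled up, so its tail is hidden from view.
curled_up_cat = {"face": "round", "ears": "pointy", "tail": False}

print(looks_like_cat(sitting_cat))    # True
print(looks_like_cat(curled_up_cat))  # False: the rule misses a real cat
```

Covering the curled-up cat means adding another rule, and every new pose, breed or camera angle demands another one after that, which is the "impossibly complex index" problem.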

She launched ImageNet, filled it with nearly a billion photos of the real world, and invited users to sort and label the photos. This produced a database of 15 million photos spanning 22,000 classes of objects, all described using English words. Li likens the dataset to a small fraction of what a child sees in its first years.

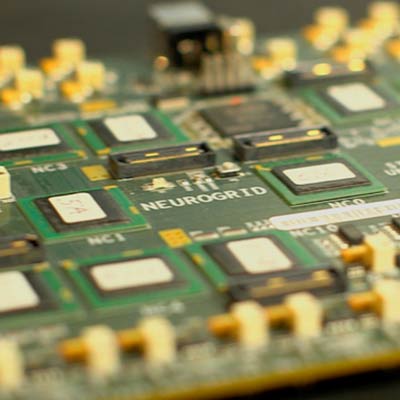

"Now that we had data to nourish the computer brain, we were ready to come back to the algorithms," Li said. She began feeding the data into a machine-learning algorithm developed in the 1980s, called a convolutional neural network, which is structured much like a brain. The data streams into 24 million "nodes," which are organized in hierarchical layers consisting of 15 billion node connections; one node's output is the next node's input, and through this the network quickly identifies objects in an image. Paired with natural language software, Li's algorithm can now "look" at a photo and, in most cases, describe it in full sentences with astonishing accuracy.

Li said that AI vision is still in its infancy. Novel scenes sometimes still throw it for a loop, and Li's lab is currently working to improve how computers reason about and comprehend what they're seeing in a complex environment. Li envisions smart cameras that can raise an alert if they recognize that a swimmer is drowning, or that can observe medical procedures and notify surgeons of potential unseen complications.

"Little by little we're giving sight to machines," Li said. "First we teach them to see, then they help us to see better."