Stanford’s Robot Makers: Allison Okamura

Allison Okamura is a professor of mechanical engineering at Stanford University and leads the CHARM lab. Her lab does research on haptics – bringing the sense of touch to robotics – and robotics applications in medicine, including surgery, prosthetics and rehabilitation. This Q&A is one of five featuring Stanford faculty who work on robots as part of the project Stanford’s Robotics Legacy.

What inspired you to take an interest in robots?

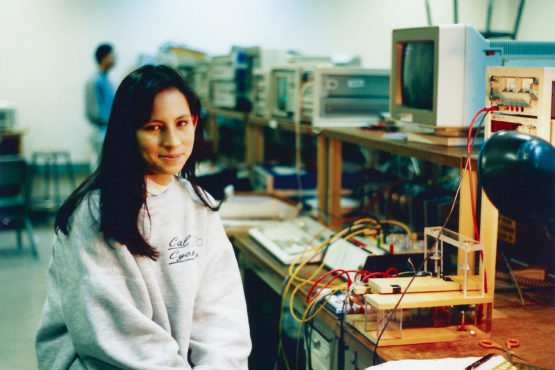

Okamura with her first mechatronics/robotics project as an undergraduate at Berkeley (circa 1994). (Image credit: Courtesy Allison Okamura)

I wasn’t one of those kids who always loved robots. When I was an undergraduate at UC Berkeley, I had some classes in mechatronics systems – devices that combine mechanical and electrical systems and programming. But the first thing that really sparked my interest in robotics was when I was a senior at UC Berkeley and Mark Cutkosky (professor of mechanical engineering) contacted me. He congratulated me on a fellowship I had won and said he hoped I would come to Stanford. I asked what he worked on in his lab and he explained that they make robot fingers and hands, which caught my interest. The rest is history.

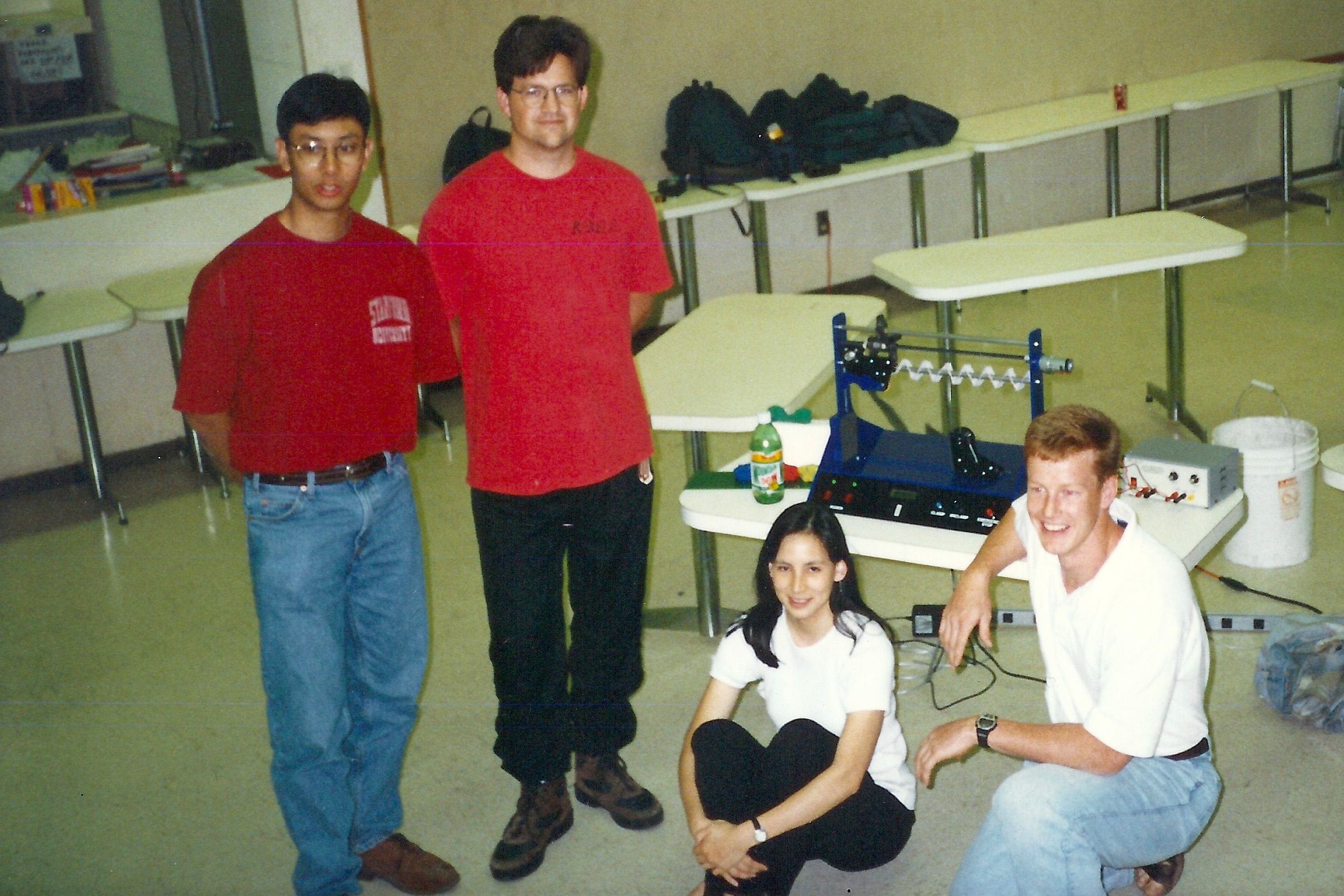

What was your first robotics project?

My first robotics project was in Mark Cutkosky’s lab. It involved using robot fingers, with sensors embedded in them, to manipulate objects. I thought that should have been a solved problem. You know, you see robots in the movies doing these kinds of tasks all the time. I learned how challenging it is from the mechanical design, control and programming points of view. I also got a good perspective on how limited robots were at that point.

My first robotics research paper came from that work. It was about the idea of moving a robot’s sensors over the object to find features that would help you understand what the object is and manipulate it.

Okamura with her ME 218 project group in spring 1995. (Image credit: Courtesy Allison Okamura)

Thinking back, what did you hope robots would be doing in the “future”?

When I was a graduate student, I definitely thought that this problem of robots being able to manipulate with their hands would be solved. I thought that a robot would be able to pick up and manipulate an object, it would be able to write gracefully with a pen, and it would be able to juggle.

In a sense, robots can do much more than they could when I was a student, but they have not reached the level that I had expected. That’s in large part because of the physical constraints and limitations of robots compared to those of the human body. So now there’s a resurgence of thinking about new ways to be clever about the physics in designing robots.

What are you working on now related to robotics?

In a way, my first robotics project got me interested in the role of touch but, although it seems quite related, I feel like I’m in a very different area of research now. I don’t really study how robots can touch and manipulate but how a human can control a remote robot to touch and manipulate. That has a lot to do with stimulating and giving feedback to the human operator, rather than the robot doing things autonomously.

When I was a PhD student, I worked part-time at a startup company that was all about providing artificial touch feedback to people, such as in virtual environments, on mobile devices or in cars. What I had done for my thesis and what I did at this startup merged into my research interests today.

We design wearable haptic devices that let you feel things in a virtual environment. These can also let you teleoperate a remote robot and feel what it’s feeling. In virtual reality, you are modeling the real world, and because we don’t know everything about the real world, the model isn’t perfect. We’re learning about how people act in these somewhat sensory-deprived environments, such as what’s important to display and sense. Haptic devices are one component of providing those sensory-motor experiences in the virtual world.

How have the big-picture goals or trends of robotics changed in the time that you’ve been in this field?

Okamura, left, celebrates a successful project with freshmen Brad Immel and Tiger Sun who worked on a team with Jonathan Sosa to create a virtual gear shifter. (Image credit: L.A. Cicero)

There’s much deeper interest now in the social nature of robots and in understanding how robots impact our daily lives. People are asking: How do humans stay in the loop – play a role in an artificially intelligent system – rather than just turning things over to autonomous robots?

In the realm of mechanical design, there’s a resurgence of creativity that I find really exciting. More people are thinking about how to be clever about mechanical design to solve problems that we don’t necessarily want to turn over to computation. Soft robots, which are a relatively new research interest of mine, are one example.

On the flip side, of course, new methods for machine learning are showing a lot of promise and attracting a lot of interest. The caveat is that making these methods work with physical robots – as opposed to recognizing cats on the internet, which is how some are tested – is much more difficult. Progress is being made, but it’s an open area of research that lots of people are excited about.