First-of-its-kind Stanford machine learning tool streamlines student feedback process for computer science professors

Stanford professors develop and use an AI teaching tool that can provide feedback on students’ homework assignments in university-level coding courses, a previously laborious and time-consuming task.

This past spring, Stanford University computer scientists unveiled their pandemic brainchild, Code In Place, a project in which 1,000 volunteer teachers taught the content of an introductory Stanford computer science course to 10,000 students around the globe.

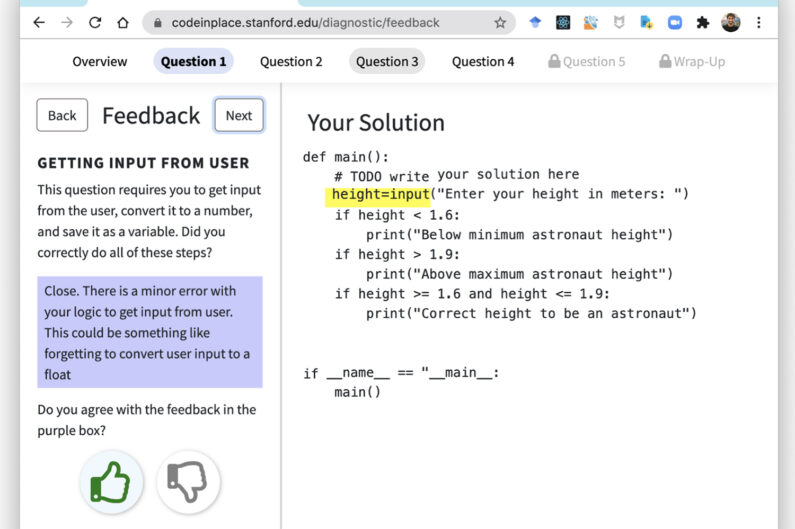

Students in Code In Place evaluated the feedback they received using this carefully designed user interface. (Image credit: Code In Place 2021)

While the instructors could share their knowledge with hundreds, even thousands, of students at a time during lectures, providing high-quality feedback on homework at that scale seemed insurmountable.

“It was a free class anyone in the world could take, and we got a whole bunch of humans to help us teach it,” said Chris Piech, assistant professor of computer science and co-creator of Code In Place. “But the one thing we couldn’t really do is scale the feedback. We can scale instruction. We can scale content. But we couldn’t really scale feedback.”

To solve this problem, Piech worked with Chelsea Finn, assistant professor of computer science and of electrical engineering, and PhD students Mike Wu and Alan Cheng to develop and test a first-of-its-kind artificial intelligence teaching tool that can help educators grade a high volume of student assignments and give meaningful, constructive feedback on them.

Their tool, which is detailed in a Stanford AI Lab blog post, exceeded their expectations.

Teaching the AI tool

In education, it can be difficult to collect much data for any single problem, such as hundreds of instructor comments on one homework question. Companies that market online coding courses face the same limitation and therefore rely on multiple-choice questions or generic error messages to review students’ work.

“This task is really hard for machine learning because you don’t have a ton of data. Assignments are changing all the time, and they’re open-ended, so we can’t just apply standard machine learning techniques,” said Finn.

The answer to scaling up feedback was an approach called meta-learning, in which a machine learning system learns from many different problems, each with a relatively small amount of data, so that it can adapt quickly to new ones.

“With a traditional machine learning tool for feedback, if an exam changed, you’d have to retrain it, but for meta-learning, the goal is to be able to do it for unseen problems, so you can generalize it to new exams and assignments as well,” said Wu, who has studied computer science education for over three years.

The group found it much easier to collect a small amount of data, such as 20 pieces of feedback, for each of a large variety of problems. Using data from previous offerings of Stanford computer science courses, they achieved accuracy at or above human level on 15,000 student submissions, a task that was not possible just one year earlier, the researchers remarked.
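The article does not describe the tool's internals. As a rough illustration of the few-shot idea only, the sketch below shows the classification step of prototypical networks, one common meta-learning technique; it is not the researchers' implementation. The token-counting `embed` function, the `VOCAB` list, and the feedback labels are all hypothetical. In a real meta-learning system the encoder would be trained across many past problems so that a handful of labeled examples is enough for a new one.

```python
from collections import defaultdict
from math import fsum

# Hypothetical stand-in for a learned encoder. In a real prototypical network
# this function is trained across many past problems (meta-training); here it
# simply counts a few hand-picked tokens in a student's program.
VOCAB = ["for", "while", "if", "return", "print"]


def embed(program: str) -> list[float]:
    tokens = program.split()
    return [float(tokens.count(word)) for word in VOCAB]


def prototypes(support: list[tuple[str, str]]) -> dict[str, list[float]]:
    """Average the embeddings of the labeled examples for each feedback label."""
    grouped: dict[str, list[list[float]]] = defaultdict(list)
    for program, label in support:
        grouped[label].append(embed(program))
    return {
        label: [fsum(column) / len(vecs) for column in zip(*vecs)]
        for label, vecs in grouped.items()
    }


def squared_distance(a: list[float], b: list[float]) -> float:
    return fsum((x - y) ** 2 for x, y in zip(a, b))


def predict(program: str, protos: dict[str, list[float]]) -> str:
    """Assign the feedback label whose prototype is nearest to the program."""
    query = embed(program)
    return min(protos, key=lambda label: squared_distance(query, protos[label]))


# A tiny, made-up "support set" for one new problem: a few instructor-labeled
# submissions (tokens separated by spaces to keep the toy encoder simple).
support = [
    ("for i in range ( n ) : print ( i )", "correct loop"),
    ("for x in items : print ( x )", "correct loop"),
    ("while True : print ( i )", "missing loop update"),
    ("while i < n : print ( i )", "missing loop update"),
]
protos = prototypes(support)
print(predict("while j < 10 : print ( j )", protos))  # prints "missing loop update"
```

Because only the per-label averages depend on the new problem, handling a new assignment means supplying a few labeled examples rather than retraining the encoder, which mirrors the kind of generalization to unseen problems that Wu describes.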

Real-world testing

The researchers crafted the tool’s language very carefully. They wanted to help students grow rather than simply mark their work right or wrong. The group credited “the human in the loop,” their emphasis on human involvement during development, as essential to the AI tool’s positive reception.

Students in Code In Place could rate their satisfaction with the feedback they received without knowing whether the AI or their instructor had provided it. The AI tool learned from human feedback on just 10% of the assignments and reviewed the rest, earning 98% student satisfaction.

“The students rated the AI feedback a little bit more positively than human feedback, despite the fact that they’re both as constructive and that they’re both identifying the same number of errors. It’s just when the AI gave constructive feedback, it tended to be more accurate,” noted Piech.

Looking ahead to the future of online education and of machine learning for education, the researchers are excited about the possibilities their work opens up.

“This is bigger than just this one online course and introductory computer science courses,” said Finn. “I think that the impact here lies substantially in making this sort of education more scalable and more accessible as a whole.”

Mike Wu is advised by Noah Goodman, associate professor of psychology and of computer science, who was also a member of this research team. Finn, Goodman and Piech are affiliates of the Institute for Human-Centered Artificial Intelligence (HAI). Goodman is also a member of the Wu Tsai Neurosciences Institute.

This project was funded by the Human-Centered Artificial Intelligence (HAI) Hoffman Yee Grant.
