Stanford’s Robot Makers: Oussama Khatib

Oussama Khatib is a professor of computer science at Stanford University and leads the Robotics Lab. His projects have included the cooperative robots Romeo and Juliet and the diving robot OceanOne. He is also interested in autonomous robots, human-friendly robotics, haptics – bringing the sense of touch to robotics – and virtual and augmented reality research. This Q&A is one of five featuring Stanford faculty who work on robots as part of the project Stanford’s Robotics Legacy.

What inspired you to take an interest in robots?

I have always loved science and started my university education thinking that I was going to travel the world teaching kids about its wonders. As an undergraduate at the school of Sup’Aero in Toulouse, France, I did a final-year project on artificial intelligence. It was a new and appealing topic. As part of that work I recall reading a book by Nils Nilsson of SRI – later to be one of my colleagues – who had contributed to developing Shakey, one of the first mobile robots. I found that final-year project to be so compelling in the opportunities it presented that I decided to do a PhD in robotics.

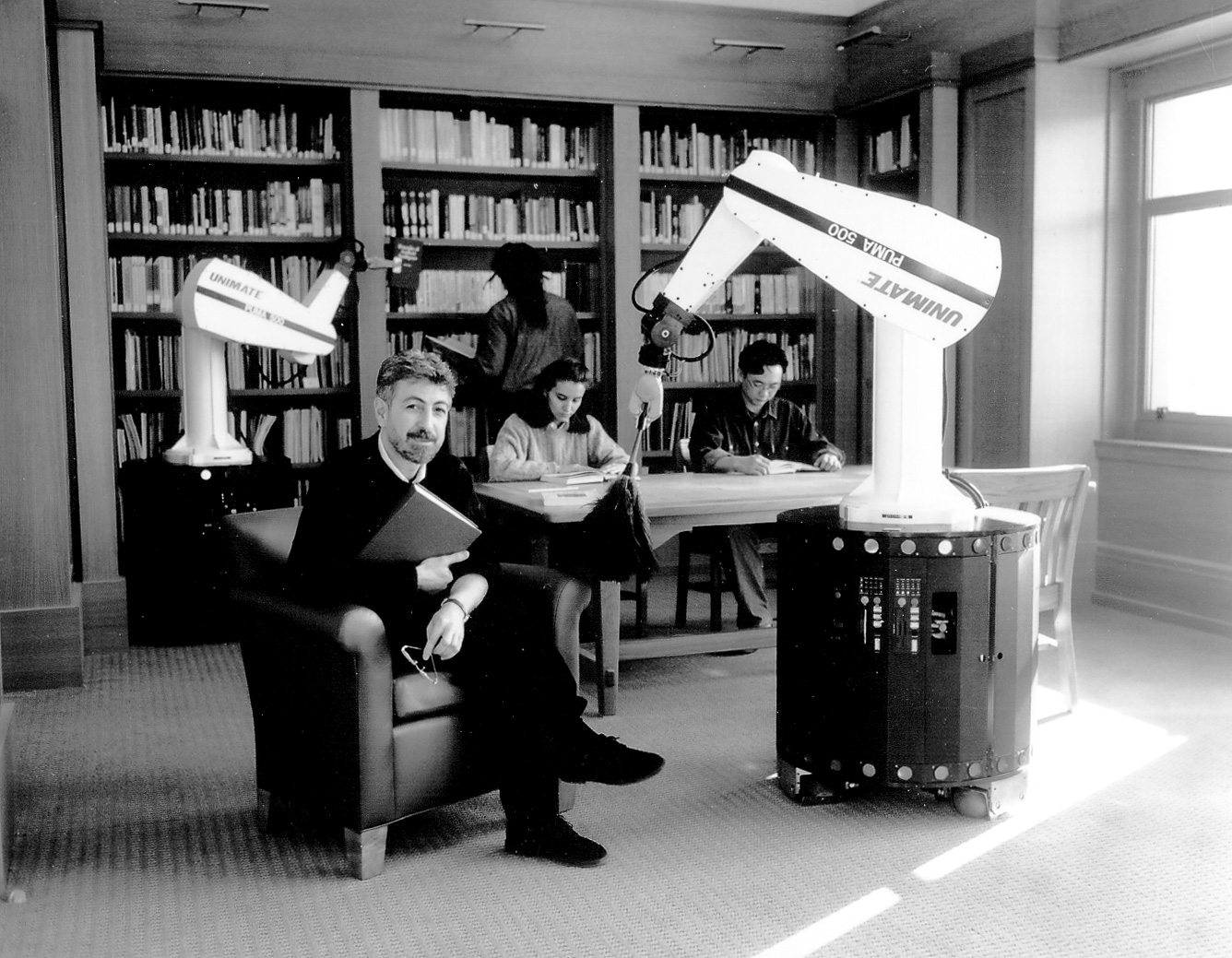

Khatib with Romeo and Juliet, mobile robotic arms that could perform a wide range of tasks and collaborate with each other. (Image credit: L.A. Cicero)

At that stage I was convinced that with a broad enough background in the fundamentals of math, physics and computer science, many new fields were within reach and, with a grasp of these basics, one could go anywhere. Even more, the problems I could see ahead in the real world – such as robotics – were much more exciting than those used in texts for teaching these materials, and it was quite wonderful.

What was your first robotics project?

At the time I began my PhD, researchers were controlling robot movement by computing specific motion trajectories. I thought this was not a good approach since a one-millimeter error in positioning could break something with which a robot hand came into contact. My idea was that we should do this more like humans, where we use senses to see and feel our way to grasping and manipulating objects.

I realized that when humans grab an object, we look at the goal and visually move our hands toward it – like having a magnet that pulls the hand, with the arm following along. To implement this idea in robots, you can create an attractive force to reach goals and a repulsive force to avoid obstacles. These are potential fields. By playing with potential fields, you can avoid collisions while moving in an easy and natural – humanlike – way.
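The attract-and-repel idea described here can be illustrated with a short sketch. This is only a toy 2D example under stated assumptions, not the formulation from the original paper: the function names, the gains `k_att` and `k_rep`, the `influence` radius, and the fixed step size are all hypothetical choices made for illustration. The goal pulls the point with a force proportional to distance, each obstacle pushes it away only when it comes within the influence radius, and the point takes small steps along the combined force.

```python
import math

def attractive_force(pos, goal, k_att=1.0):
    """Pull toward the goal, proportional to the distance (like a spring)."""
    return (k_att * (goal[0] - pos[0]), k_att * (goal[1] - pos[1]))

def repulsive_force(pos, obstacle, influence=1.0, k_rep=0.5):
    """Push away from a point obstacle, active only inside `influence`."""
    dx, dy = pos[0] - obstacle[0], pos[1] - obstacle[1]
    dist = math.hypot(dx, dy)
    if dist >= influence or dist == 0.0:
        return (0.0, 0.0)
    # Grows sharply as the distance shrinks, fades to zero at the radius.
    magnitude = k_rep * (1.0 / dist - 1.0 / influence) / dist ** 2
    return (magnitude * dx / dist, magnitude * dy / dist)

def follow_field(start, goal, obstacles, step=0.02, tol=0.05, max_iters=5000):
    """Take fixed-length steps along the combined force until near the goal."""
    pos = start
    path = [pos]
    for _ in range(max_iters):
        if math.dist(pos, goal) < tol:
            break
        fx, fy = attractive_force(pos, goal)
        for obs in obstacles:
            rx, ry = repulsive_force(pos, obs)
            fx += rx
            fy += ry
        norm = math.hypot(fx, fy)
        if norm == 0.0:
            break  # forces cancel exactly: stuck at an equilibrium
        pos = (pos[0] + step * fx / norm, pos[1] + step * fy / norm)
        path.append(pos)
    return path

if __name__ == "__main__":
    goal = (4.0, 0.0)
    obstacle = (2.0, 0.1)  # sits almost directly between start and goal
    path = follow_field((0.0, 0.0), goal, [obstacle])
    closest = min(math.dist(p, obstacle) for p in path)
    print(f"steps: {len(path) - 1}, closest approach: {closest:.2f}")
```

One known limitation of this kind of scheme is that the forces can cancel at a point other than the goal, leaving the point stuck; the sketch only detects an exact cancellation and stops.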

After presenting a paper I wrote on this approach at a conference in Italy – including a beginning formulation of the mathematics and demonstration by simulations – I had the good fortune to be sitting on a conference bus next to Bernie Roth, a professor at Stanford. He told me how he had been impressed by my paper and invited me – then and there – to spend a year at Stanford to explore some of these ideas. When I finished my PhD in France I came to Stanford and with everything Stanford has to offer, found it, of course, impossible to consider leaving. Even now – after all these years – it’s still so exciting, and I feel I’ve been just finishing up my thesis. Large parts of the work have been done in collaboration with my students, surely, who have all contributed much to the theory that has come from deeper study of that original approach.

Thinking back over your own concept of robotics, what did you hope robots would do in “the future”?

Manufacturing helped develop the first generation of robots but, from about the mid-’90s, I began looking more into robots in a human environment. This is really the fun robotics – this is where you aim for having intelligence in the robot’s behavior. This is what I had been after from the beginning.

Having robots interact with humans and the world means relying on sensing, and developing the ability for the robot to perceive, build its own models of its environment, and make decisions about how to move around, make contact, pick things up, etc. My group had always been inspired by the human example but to go further with this requires a more complex understanding than mechanics and computing provide. So, we’ve worked with colleagues in bioengineering and medicine to understand better, to model and then to simulate articulated body systems. One of our goals has been to do this analysis in real time, fast enough to control a real robot as it operates in our environment.

Among many applications, the algorithms and the capabilities we developed will allow us to go back and build robots that can be used in manufacturing. Small and medium enterprises that can’t afford assembly lines can benefit if we are able to bring flexible, collaborative robots that can be integrated easily at low cost in those environments.

What are you working on now related to robotics?

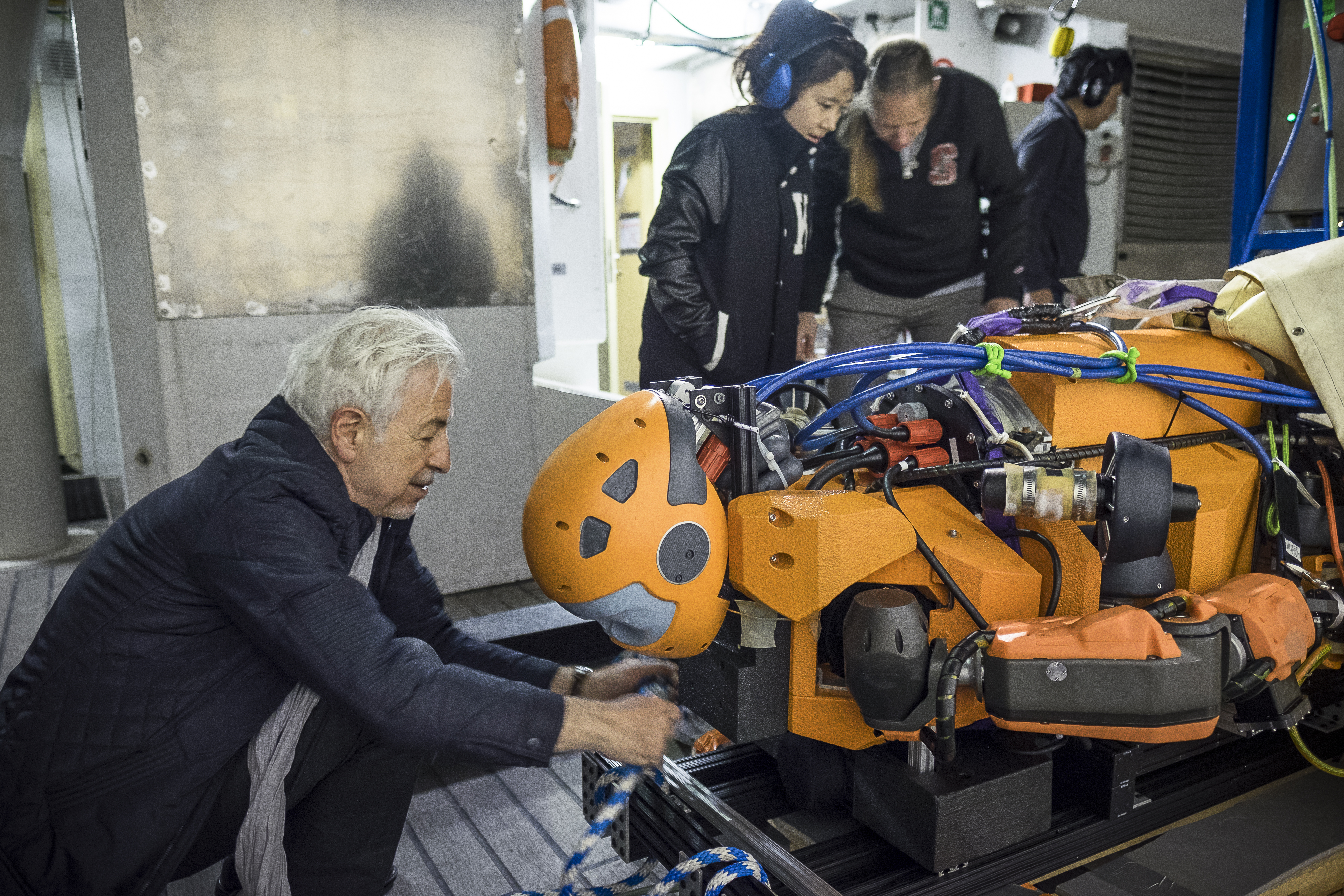

For the past few years, OceanOne has been Khatib’s passion project. (Image credit: Frederic Osada and Teddy Seguin/DRASSM)

We developed OceanOne, a robot that can dive and recover objects at depths where humans can’t operate. We can take OceanOne into the depths of its underwater environment where a human up above can operate it through visual and haptic force feedback, or replace it with a computer simulation, which lets us train for the same operations. In both cases you can feel exactly what the robot feels – that’s the haptic force feedback – and the robot does exactly what you command through your hands. We took OceanOne down to a vessel of King Louis XIV that sank off the coast of France in 1664 and recovered artifacts from its debris field. We also went to the Kolumbo volcano in Santorini to gather samples for geologists.

With this we have an avatar – a robot functionality that we are developing for other tasks such as extracting gold underwater or in mines without destroying the environment or putting people at risk. We are also working with robots for disaster sites and high-altitude stations.

How have the big-picture goals or trends of robotics changed in the time that you’ve been in this field?

I remember being in Chicago in the early ’80s at a conference where there were a large number of robotics companies promising to solve all sorts of problems in manufacturing. There was a lot of investment at the time, but the technology wasn’t quite there for supporting what they were selling, so the promises fell short and by ’87 there was a big fall of robotics. It was an early version of the dot-com crash.

We eventually began to see robots doing simple manufacturing transfer tasks. These were successful applications because the positioning of all parts was very controlled and there was no uncertainty (i.e., no need for advanced sensing) and the tasks weren’t well-suited for humans, such as handling heavy loads or working in dangerous environments. They were simple pick and place. You had to isolate these robots from humans – effectively putting them in cages – since they tended to be big and fast and had very little sensing feedback. Nevertheless, in laboratories such as mine people continued to work on developing intelligent robots.

Now in robotics, there is not just physical interaction but cognitive interaction – robots that collaborate with humans. This is because we are now recognizing the need for domestic robots, helpers and systems for human augmentation, for example with our aging society, and are building toward this goal of co-robots – robot systems that collaborate and interact with people. We’re also talking about building collaborative solutions for urban farming and going inside confined spaces where humans cannot go and where, in the past, robots could not go because of their inability to accommodate uncertainty.

Robotics is multidisciplinary. You need mechanical designers, electrical engineers, computer scientists, psychologists, biologists and others. And the computation power is so critical to making the robot work in an effective manner that lets it interact in real time. It took years for these related technologies to develop, and it is only now that we are certain that we have what it takes to deliver on those promises of decades ago – we are ready to let robots escape from their cages and move into our human environment.